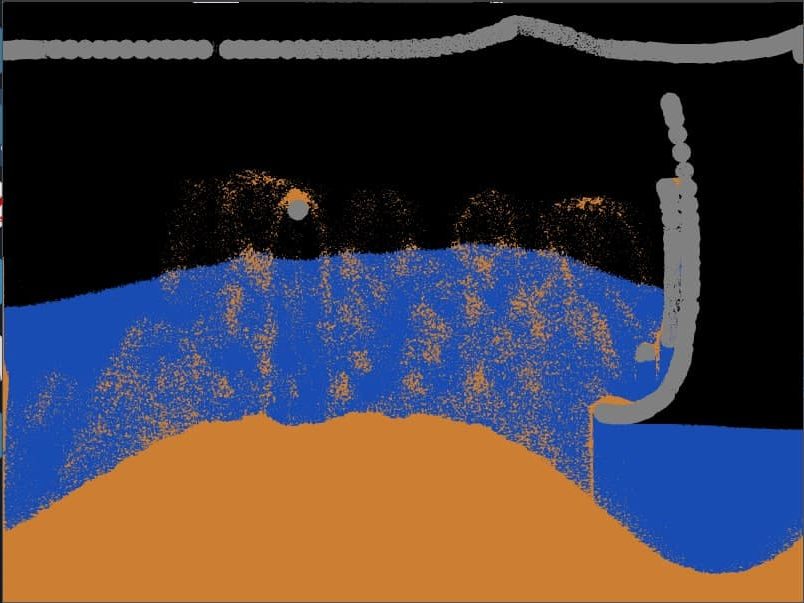

A particle simulation inspired by the game “Noita”. There are currently six particle types: sand, water, stone, fire, smoke, and steam. This is the first DirectX 12 project I have made. The source code is available on GitHub. Please check out the demo; it is very satisfying to play.

Source Code: GitHub

Demo: Google Drive

Some Technical Details

- This project is written purely in C++ with DirectX 12. Nsight Graphics was used as the graphics debugging tool.

- Every pixel in the game represents a particle. The screen is 800×600, so there are 480,000 particles in total.

- All the particles are stored in vector<Particle> worldData.

- There is also a vector of Color32 objects that holds the color of each pixel. Every frame this vector is uploaded into a texture on the GPU, and the scene is rendered with a single draw call that draws that texture as one quad covering the entire screen.

- Here are the rules for how the particles move:

- A sand particle moves downwards if the pixel below it is empty. Otherwise it moves to the bottom-right or bottom-left. If there is a water particle beneath it, the two swap locations, which emulates sand sinking in water.

- A water particle can do everything a sand particle can, but it can also move straight left or right.

- A stone particle doesn’t move and can’t be destroyed.

- A fire particle changes color depending on its lifetime. If it comes into contact with a water particle, both particles are destroyed and a steam particle is created.

- A smoke particle is created by a fire particle and moves exactly like a sand particle, but with the Y axis inverted, so it moves upwards.

- A steam particle behaves like a smoke particle but has a different color. It is created when a fire particle touches a water particle.
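The sand and water rules above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: only `Particle` and `worldData` come from the text above, while `World`, `ParticleType`, `tryMove`, `stepSand`, `stepWater`, and the `preferLeft` flag are hypothetical names. It also assumes row-major storage with Y growing downwards, and that sinking through water is checked before the diagonal moves.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical types: the post only names `Particle` and `worldData`.
enum class ParticleType { Empty, Sand, Water, Stone };

struct Particle {
    ParticleType type = ParticleType::Empty;
};

struct World {
    int width;
    int height;
    std::vector<Particle> worldData;  // one particle per pixel, row-major (assumed)

    World(int w, int h)
        : width(w), height(h), worldData(static_cast<std::size_t>(w) * h) {}

    bool inBounds(int x, int y) const {
        return x >= 0 && x < width && y >= 0 && y < height;
    }

    Particle& at(int x, int y) {
        assert(inBounds(x, y));
        return worldData[static_cast<std::size_t>(y) * width + x];
    }

    // Swap (x, y) with (nx, ny) if the target is in bounds and has type `allowed`.
    bool tryMove(int x, int y, int nx, int ny, ParticleType allowed) {
        if (!inBounds(nx, ny) || at(nx, ny).type != allowed) return false;
        std::swap(at(x, y), at(nx, ny));
        return true;
    }
};

// Sand: fall straight down, sink through water, otherwise slide diagonally.
// `preferLeft` picks which diagonal is tried first (an assumption; the post
// does not say how the side is chosen).
bool stepSand(World& w, int x, int y, bool preferLeft) {
    if (w.tryMove(x, y, x, y + 1, ParticleType::Empty)) return true;
    if (w.tryMove(x, y, x, y + 1, ParticleType::Water)) return true;
    const int first = preferLeft ? -1 : 1;
    if (w.tryMove(x, y, x + first, y + 1, ParticleType::Empty)) return true;
    return w.tryMove(x, y, x - first, y + 1, ParticleType::Empty);
}

// Water: down, then diagonals, then straight sideways.
bool stepWater(World& w, int x, int y, bool preferLeft) {
    if (w.tryMove(x, y, x, y + 1, ParticleType::Empty)) return true;
    const int first = preferLeft ? -1 : 1;
    if (w.tryMove(x, y, x + first, y + 1, ParticleType::Empty)) return true;
    if (w.tryMove(x, y, x - first, y + 1, ParticleType::Empty)) return true;
    if (w.tryMove(x, y, x + first, y, ParticleType::Empty)) return true;
    return w.tryMove(x, y, x - first, y, ParticleType::Empty);
}
```

Both functions return whether the particle moved, which is handy later for deciding which regions of the screen are still changing. Smoke and steam could reuse the same pattern with `y - 1` in place of `y + 1`.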

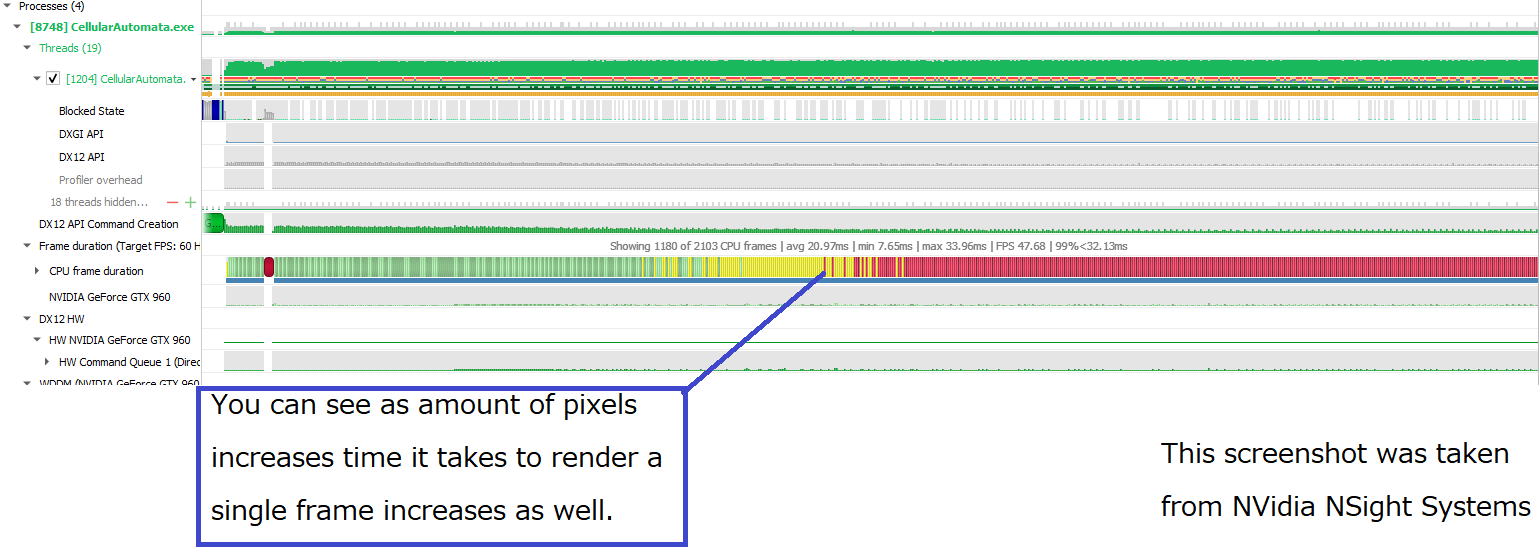

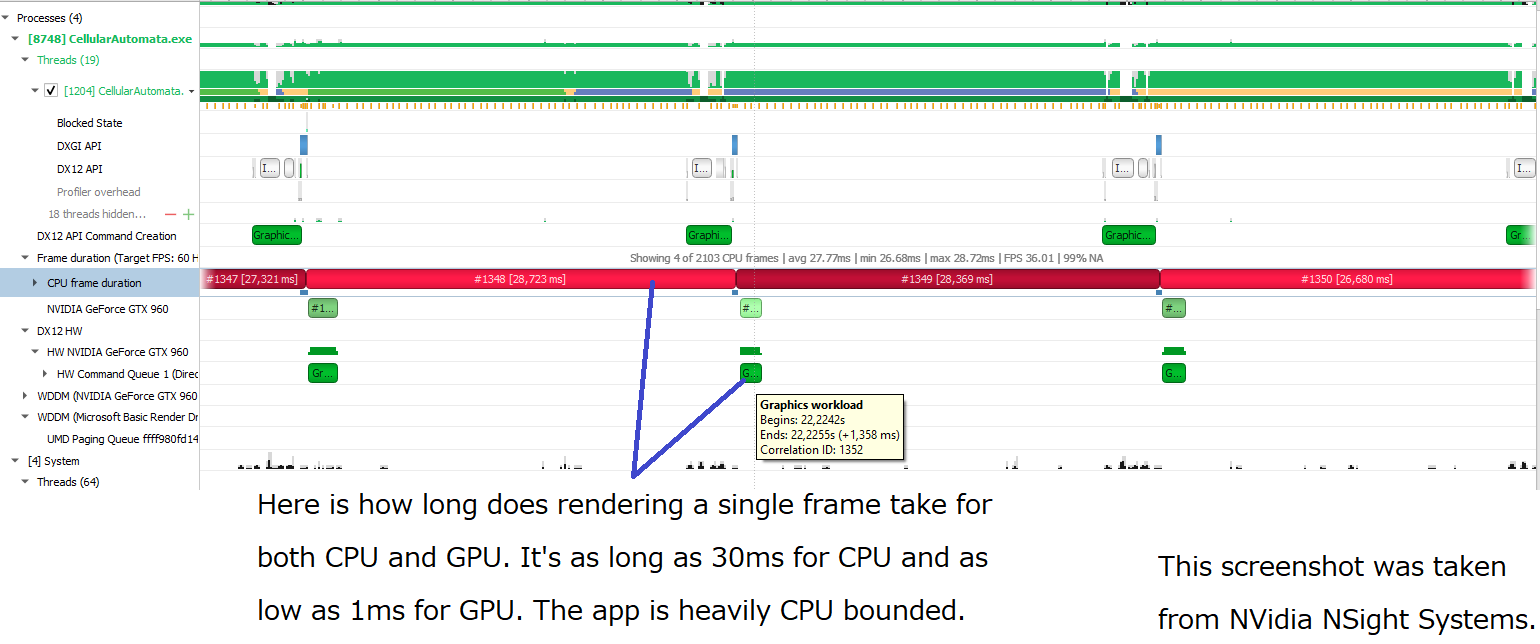

- There are some performance concerns. The particles are simulated on the CPU, and all the GPU does is render a single quad that covers the entire screen, so the simulation is heavily CPU-bound.

How Can It Be Improved?

Every frame we iterate over every pixel in the texture to render the scene. The first issue with this approach is that the work is done even for parts of the texture that have not changed. Let’s call these parts “inactive”. When the application starts, all we see is a black screen, so every part of the scene is inactive. That means we don’t need to iterate over a part of the texture if nothing in it has changed since the last frame. With that in mind, here are a couple of improvements we can make.

The first optimization is to divide the screen into chunks and mark a chunk as “active” if it contains a pixel whose state needs to change. Then, every frame, we process only the active chunks and skip the inactive ones. That would reduce the number of pixels we have to iterate over, and should improve performance considerably whenever most of the screen is idle.
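One possible way to track chunk activity is sketched below. Nothing here exists in the project yet: `ChunkGrid`, `wake`, `endFrame`, and the chunk size of 50 pixels used in the usage note are all assumptions made for illustration. The idea is that whenever a particle moves, the simulation wakes the chunk it landed in, and only woken chunks are visited on the next frame.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: tracks which square chunks of the screen need updating.
class ChunkGrid {
public:
    ChunkGrid(int worldWidth, int worldHeight, int chunkSize)
        : chunkSize_(chunkSize),
          cols_((worldWidth + chunkSize - 1) / chunkSize),   // round up
          rows_((worldHeight + chunkSize - 1) / chunkSize),  // round up
          active_(static_cast<std::size_t>(cols_) * rows_, false),
          next_(active_.size(), false) {}

    // Mark the chunk containing pixel (x, y) as active for the NEXT frame.
    void wake(int x, int y) {
        assert(x >= 0 && y >= 0);
        const int cx = x / chunkSize_;
        const int cy = y / chunkSize_;
        assert(cx < cols_ && cy < rows_);
        next_[index(cx, cy)] = true;
    }

    // True if chunk (cx, cy) should be simulated this frame.
    bool isActive(int cx, int cy) const { return active_[index(cx, cy)]; }

    // Call once at the end of each frame: chunks woken during this frame
    // become active, everything else goes back to sleep.
    void endFrame() {
        active_.swap(next_);
        next_.assign(next_.size(), false);
    }

    int cols() const { return cols_; }
    int rows() const { return rows_; }

    int activeCount() const {
        int n = 0;
        for (bool a : active_) n += a ? 1 : 0;
        return n;
    }

private:
    std::size_t index(int cx, int cy) const {
        return static_cast<std::size_t>(cy) * cols_ + cx;
    }

    int chunkSize_;
    int cols_;
    int rows_;
    std::vector<bool> active_;  // chunks to simulate this frame
    std::vector<bool> next_;    // chunks woken for the next frame
};
```

With an 800×600 screen and 50-pixel chunks, `ChunkGrid grid(800, 600, 50)` gives a 16×12 grid. A particle that moves should wake both the pixel it left and the pixel it entered, and particles next to a chunk border probably need to wake the neighbouring chunk as well, otherwise a pile of sand could stay frozen after the sand beneath it falls away.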

Another improvement is to process the scene in a multithreaded fashion. We can iterate over multiple chunks at the same time to reduce the time it takes to produce a single frame. That would also improve performance, at the cost of making the code more complex.
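A rough sketch of how chunks might be processed in parallel is shown below. It is not taken from the project: `parallelFor` and `updateChunksInPasses` are hypothetical names, and the four-pass "checkerboard" ordering is just one common way to keep threads from touching neighbouring chunks at the same time. It assumes a particle moves at most one pixel per step and that chunks are more than a couple of pixels wide.

```cpp
#include <algorithm>
#include <atomic>
#include <cstddef>
#include <functional>
#include <thread>
#include <utility>
#include <vector>

// Runs fn(i) for every i in [0, count) on up to `workers` threads.
// fn is assumed not to throw.
void parallelFor(std::size_t count, unsigned workers,
                 const std::function<void(std::size_t)>& fn) {
    workers = std::max(1u, workers);
    std::atomic<std::size_t> next{0};
    auto loop = [&] {
        // Each thread grabs the next unclaimed index until none are left.
        for (std::size_t i = next.fetch_add(1); i < count; i = next.fetch_add(1)) {
            fn(i);
        }
    };
    std::vector<std::thread> pool;
    for (unsigned t = 1; t < workers; ++t) pool.emplace_back(loop);
    loop();  // the calling thread does its share too
    for (auto& th : pool) th.join();
}

// Visits every chunk exactly once, in four passes. Within one pass, any two
// chunks are at least one chunk apart, so threads never work on adjacent chunks.
void updateChunksInPasses(int cols, int rows, unsigned workers,
                          const std::function<void(int, int)>& updateChunk) {
    for (int phase = 0; phase < 4; ++phase) {
        std::vector<std::pair<int, int>> batch;
        for (int cy = phase / 2; cy < rows; cy += 2) {
            for (int cx = phase % 2; cx < cols; cx += 2) {
                batch.emplace_back(cx, cy);
            }
        }
        parallelFor(batch.size(), workers, [&](std::size_t i) {
            updateChunk(batch[i].first, batch[i].second);
        });
    }
}
```

A call such as `updateChunksInPasses(16, 12, std::thread::hardware_concurrency(), simulateChunk)` would run a (hypothetical) `simulateChunk(cx, cy)` for each chunk. Spawning threads every frame is wasteful, so a real implementation would more likely keep a persistent thread pool and skip inactive chunks when building each batch.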

The final improvement I can think of is to run the simulation on the GPU, possibly in a compute shader. I’m not sure yet how this would work or how it could be implemented.